In the previous seven (7) blogs of this series, we have concentrated on the many positive impacts we foresee from the use of Artificial Intelligence (AI) in healthcare; however, we want to bring attention to the fact that it is not all blue sky. This amazing human advancement, as we’ve noted before, comes with cautions. We have seen nearly every technology innovation of the last 50 years, and no other technology, until AI, has divided well-respected experts into such opposing camps, one extolling its unlimited virtues and the other issuing dire warnings.

We are aware there are many opportunities for misuse that could cause significant harm – but then, nothing in healthcare is without risk. In this blog, and the one following, we are going to look at some of the potential vulnerabilities of AI in healthcare, as well as what we recommend, and are doing, to prevent or mitigate them.

We are all acutely aware of the institutional resistance to change in healthcare. Resistance to change can be thought of as inertia, which, you will recall, is the tendency to do nothing or to remain unchanged. Inertia may appear in physical objects or in the minds of people, and in an industry with inertia as high as healthcare’s, the forces for change must be extremely compelling before change is implemented. Some have argued that this reluctance stems from an older generation of physicians who were ‘afraid of change,’ but that is much less the case; it has much more to do with the limited adaptability of healthcare systems and processes. The practice of medicine is unique among industries because a human life can be in the balance, and this calls for a much sharper focus on highly structured quality processes to ensure that the required standard of care is met. The result is a much more rigid, regulated system, with deeper moral and ethical boundaries than any other segment of our economy.

We have witnessed changes being attempted in healthcare over the years and, on balance, little if any positive result has come of them. One of the best examples is the Electronic Medical Record (EMR), which is now pushing 30 years old, 10 of them in earnest. This attempt at driving change by pushing technology into the healthcare mainstream has, by many measures, been a total failure for all but the large legacy hospital systems. Costs and physician workload have increased, not decreased as hyped. On top of this comes increased liability for the physician: the depth and volume of data are more than a physician can absorb, yet the physician remains responsible for all of it. These unaddressed issues have pushed many physicians to abandon the EMR and revert to the old paper file system, despite taking a reduced reimbursement from Medicare. Quite simply, we do not need any more great and grandiose ideas slammed into the healthcare system with the expectation of improving the system and bettering the process. The healthcare industry is the wrong place to experiment with solutions looking for a problem. Industry in this country has, in general, proven that simply ‘automating’ existing processes provides minimal value to quality or the bottom line, and this is certainly true of the EMR debacle in healthcare – costs have increased sharply, not decreased!

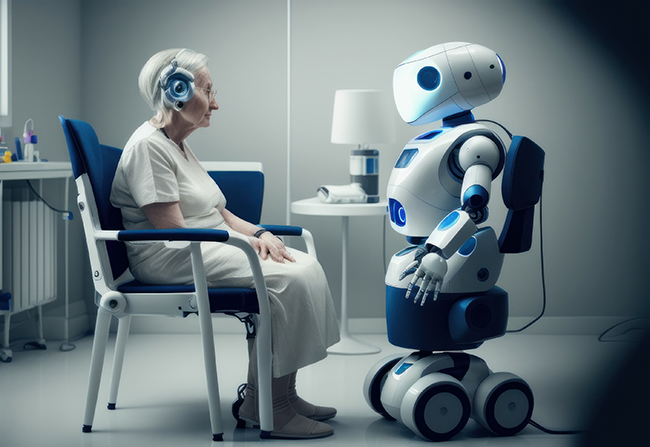

One thing we do not see on the horizon or in our lifetime is an AI program or ‘robot’ replacing the physician. After all, even Star Trek® had ‘Bones’: even with far more sophisticated diagnostics and other medical capabilities, the “Doc” was still needed. Human-to-human contact, particularly when a person is not well, is very much needed and reassuring, especially at both ends of the age spectrum, geriatrics and pediatrics. So, let’s set aside any ‘dark’ thoughts about AI replacing doctors; however, AI may bring about a restructuring of some jobs and positions in many industries, including healthcare.

Job Displacement

One of the most immediate and obvious consequences of AI is the potential for job displacement; however, this has been the case with many technologies we’ve seen, not the least of which has been the internet. As AI systems become more sophisticated, they can automate a wider range of tasks that are currently performed by humans. Once robotics is further perfected, I would expect more widespread disruption in employment, particularly in sectors such as manufacturing, customer service, and transportation – after all, the assembly of most automobiles manufactured by the Big 3 Automakers is already more than 75% automated. Already, companies are designing robotic equipment to perform automobile tire rotation, tire changes, and even repair of flat tires. In healthcare, the use of robotics in the operating theater is already seeing some major gains with use in gynecological, prostate, kidney, colorectal and gallbladder surgeries; however, the uniqueness of the human body from one person to the next presents some interesting challenges – at least if one is concerned about the life of the patient.

In healthcare, it will be the lower-end positions that are most immediately affected. One can foresee a physician practice where patient reception is a sign-in on an electronic pad (some are already doing this) that alerts both physician and attending nurse that the “2:00 PM patient has arrived.” Readily available devices then carry out the ‘intake process,’ capturing respirations, blood pressure, heart rate, and reason for visit. The AI platform can provide added value by instantly verifying that the individual is the patient (by anything from facial and voice recognition to a simple date-of-birth check), checking the patient’s medical records and comparing the current complaint with the patient’s history, confirming with the patient the information obtained, checking medication compliance, and doing a preliminary workup to be summarized for the physician. In this scenario one would wonder why the intake clinician was needed.

Depending on the type of practice and office procedures, this could replace an entry-level position and go beyond typical intake, adding a records check, verification of insurance coverage, and a review of past visits to find the common denominators. In a more advanced implementation, the system could perform a full diagnostic workup of the patient for the physician’s review and use. Indeed, even a simple repeat checkup could be performed entirely by the AI platform, negating the need for a nurse or trained clinician to collect the data, with the added value of the AI platform quickly accessing the background and history of the patient.

Other areas potentially at risk of being displaced by an AI platform are the scheduling and billing functions. In fact, today’s scheduling apps have already made having a person do scheduling for the practice obsolete. Billing is likewise ripe for replacement by an AI program that can decode, crossmatch, and synchronize patient complaint and physician diagnosis, factor in insurance company refusal statistics, review for possible billing anomalies, and create a patient bill in minutes instead of hours, with a higher probability of payment and all without human intervention. These billing applications are being worked on as you read this.

Potentially larger in impact are the economic, social, and financial consequences of creating an access portal for hackers or others with nefarious intent – I categorize these in general as Security Risks.

Security Risks

This brief section cannot possibly cover all types and categories of security risks. AI systems are highly complex, costly to operate, and contain extremely sensitive data that can have far-reaching implications not only for patients, but also for practices and hospitals. The newness of AI makes these systems a high-profile target of opportunity for hackers and other malicious actors, whether their purpose is to steal the valuable medical data (an asset) held in the databases or, with nefarious intent, to cause harm to specific individuals or to the segments of the population the AI program addresses.

There are two aspects to the security risk to AI Healthcare platforms. First, the risk of data theft is no different from the threat that exists today with EMR/EHR platforms. We’ve witnessed dozens of data thefts or data breaches over the years, ranging from employees who are careless with passwords or who lose laptops to direct cyber hacking of the platform to steal medical records data, including sensitive patient information, credit card data, and personal information. Protection against such cyberattacks is already in use today in high-security systems.

Our plan is to incorporate a ‘defense-in-depth’ concept involving multiple layers of both hardware/firmware and software that detect, delay, and discover intruders in order to thwart unauthorized access. It is not possible to design and implement a totally secure, hack-proof, bullet-proof computer software platform, and so we must establish mitigating features and warning systems in the event an intrusion is attempted.

The next security risk to the AI Healthcare platform is much more subtle than the first because it could go undiscovered for weeks, months, or even years. This threat is the hacking of the AI algorithms directly, not to obtain the data, but to modify the algorithm such that under certain conditions or with certain triggers the algorithm could do any number of things including a massive data dump, or the alteration of certain protocols. Because the possibilities are quite endless, the protection scheme must be both robust and resilient without adding overhead to the transaction processing.

One concept, in addition to trapping failed attempts at accessing the system, which every healthcare platform should do, is to create a secondary, independent, and isolated monitoring program that constantly performs the equivalent of a Cyclic Redundancy Check on the critical portions of the AI algorithms. Upon detecting any unauthorized change or anomaly, it either raises an alert or automatically suspends operation and reports the suspected intrusion.
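The monitoring idea above can be sketched roughly as follows. This is only an illustrative sketch, not our production design: it assumes the critical portions of the algorithm live in files on disk, and it swaps in a SHA-256 digest for the checksum, since a plain CRC catches accidental corruption but is easy for a deliberate attacker to defeat. The function names (`build_baseline`, `check_integrity`, `respond`) are hypothetical.

```python
import hashlib
from pathlib import Path


def fingerprint(path):
    """Return the SHA-256 hex digest of a file's current contents."""
    return hashlib.sha256(Path(path).read_bytes()).hexdigest()


def build_baseline(paths):
    """Record a trusted digest for each critical file (run at authorized release time)."""
    return {str(p): fingerprint(p) for p in paths}


def check_integrity(baseline):
    """Return the files that are missing or whose contents no longer match the baseline."""
    suspect = []
    for name, expected in baseline.items():
        if not Path(name).is_file() or fingerprint(name) != expected:
            suspect.append(name)
    return suspect


def respond(suspect):
    """Hypothetical policy: suspend operation on any mismatch, otherwise carry on."""
    return "suspend" if suspect else "ok"
```

In practice the monitor would run `check_integrity` on a schedule from a separate, isolated host, and the baseline itself would need to be stored somewhere the platform cannot write to; otherwise an intruder could simply update both.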

Another concept would be to implement a blockchain program that holds the algorithm immutable, so that any difference in the algorithm or platform code made without proper authorization results, just as in the previous example, in an alert, isolation, or even shutdown of the process, depending on the severity of the anomaly. These are simple examples and far more novel protection is already on the design table; however, the point is that protecting the AI algorithm is a number one priority to ensure that the platform performs faithfully.
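To make the blockchain idea a little more concrete, here is a deliberately simplified sketch. It is a single-machine hash chain rather than a distributed blockchain, and it assumes each authorized release of the algorithm is recorded as a block containing the digest of the released code. All names here (`append_release`, `chain_is_valid`, `is_authorized`, the `approver` field) are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, artifact_digest, approver):
    """Hash the block's fields so that any later edit to them is detectable."""
    payload = json.dumps([index, prev_hash, artifact_digest, approver]).encode()
    return hashlib.sha256(payload).hexdigest()


def append_release(chain, artifact_bytes, approver):
    """Record an authorized release by linking it to the previous block."""
    index = len(chain)
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    digest = hashlib.sha256(artifact_bytes).hexdigest()
    block = {
        "index": index,
        "prev_hash": prev_hash,
        "artifact_digest": digest,
        "approver": approver,
        "hash": block_hash(index, prev_hash, digest, approver),
    }
    chain.append(block)
    return block


def chain_is_valid(chain):
    """Verify every block's own hash and its link to the block before it."""
    prev_hash = GENESIS
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(
            block["index"], block["prev_hash"],
            block["artifact_digest"], block["approver"],
        )
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


def is_authorized(chain, artifact_bytes):
    """True only if the running code matches the latest release on an intact chain."""
    if not chain or not chain_is_valid(chain):
        return False
    return hashlib.sha256(artifact_bytes).hexdigest() == chain[-1]["artifact_digest"]
```

A monitor could call `is_authorized` against the deployed code and trigger the alert, isolation, or shutdown response when it returns `False`. A real deployment would also need signed approvals and copies of the chain held by independent parties, which this sketch leaves out.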

In the next blog, we will review a few more areas of vulnerability that need to be addressed in the design of any AI-Healthcare program. These areas are:

- Biases – We will briefly explore several types of bias that can be inadvertently built into the algorithms and how these can be avoided.

- Accuracy and reliability – One of the biggest concerns about AI in healthcare is its accuracy and reliability.

- Cost – AI-powered tools can be expensive to develop and implement. This could make them unaffordable for some healthcare providers, especially those in rural or underserved areas.

AI offers incredible opportunities for increasing patient access to medical care, improving the physician-patient relationship and quality of care, increasing physician practice revenue, and lowering the cost of most forms of medical care – most notably, the primary care areas including pediatrics, OB/GYN, geriatrics and cardiology. AI is a technology that remains in its infancy and its true advantages are yet to be explored, especially in healthcare where, as I’ve noted, there is a high inertia or resistance to any change. Healthcare is one industry where one does not experiment with technology or process changes.

In summary, while there are many advantages to implementing AI, there are also areas needing vigilance, “Caution Areas” let’s call them. These are areas in which we must be astute and truly think beyond the immediate issue to the future ramifications of the design being implemented. We’ve explored a couple here and will explore the three (3) more mentioned above in the next blog. The issue is not about AI taking over the world, as in some sci-fi movie, but rather about it being depended upon too heavily for life-and-death decisions. After all, the algorithms are created by man and man makes errors – which is why an independent self-check mechanism must be in place within any AI program relied upon for important decision making. One of the old and true axioms regarding computers is as true today with AI as ever – “Garbage In / Garbage Out!”

– Carl L. Larsen, President & Chief Operating Officer of OXIO Health, Inc.